Single-Node Cloud Resilient Deployment Using AWS

A Helm Chart (GitHub, ArtifactHub) is available for our resiliency and high-availability deployment options. Be sure to read the deployment instructions in the associated README file before using the chart.

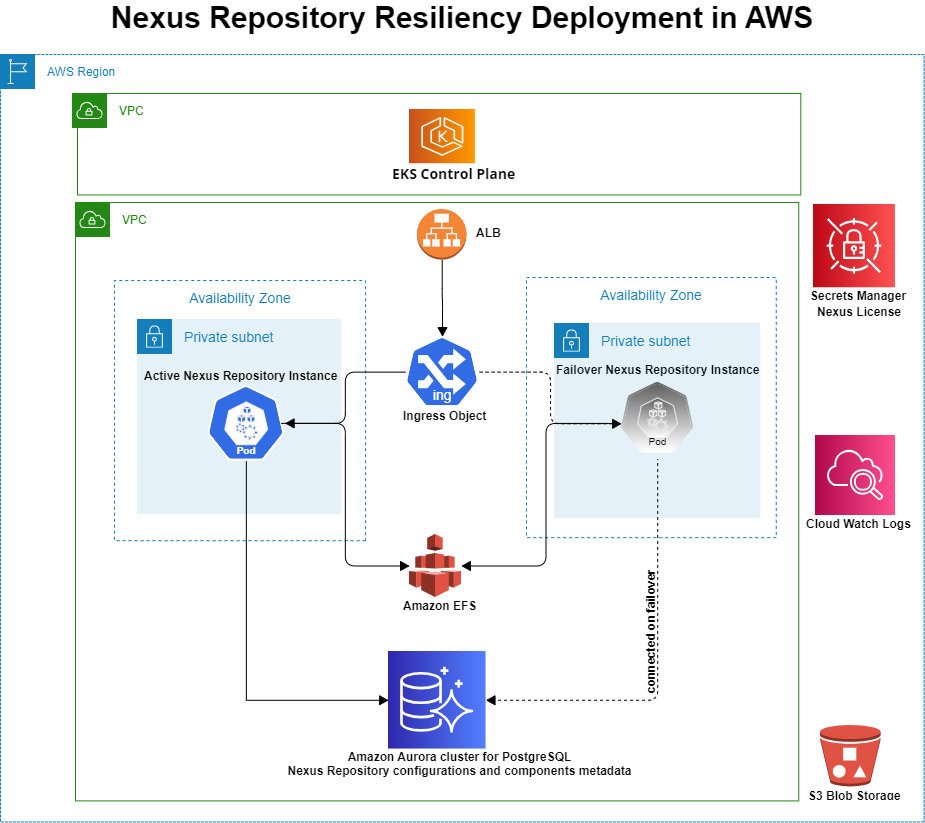

This section provides instructions for setting up Nexus Repository in Amazon Web Services (AWS) aligned to the following deployment model.

Use Cases

This reference architecture is designed to protect against the following scenarios:

An AWS Availability Zone (AZ) outage within a single AWS region

A node/server (i.e., EC2) failure

A Nexus Repository service failure

You would use this architecture if you fit the following profiles:

You are looking for a resilient Nexus Repository deployment option in AWS to reduce downtime.

You would like to achieve automatic failover and fault tolerance as part of your deployment goals.

You already have an Elastic Kubernetes Service (EKS) cluster set up as part of your deployment pipeline for your other in-house applications and would like to leverage the same for your Nexus Repository deployment.

You have migrated or set up Nexus Repository with an external PostgreSQL database and want to fully reap the benefits of an externalized database setup.

You do not need High Availability (HA) active-active mode.

This model is compatible with the Community Edition (CE). The included Helm chart is only available for Pro deployments; however, it may be manually adjusted for CE deployments.

Requirements

To set up an environment like the one illustrated above and described in this section, you will need the following:

Nexus Repository 3.33.0 or later

An AWS account with permissions for accessing the following AWS services:

Elastic Kubernetes Service (EKS)

Relational Database Service (RDS) for PostgreSQL

Application Load Balancer (ALB)

CloudWatch

Simple Storage Service (S3)

Secrets Manager

Limitations

In this reference architecture, at most one Nexus Repository instance runs at a time. Running more than one Nexus Repository replica is not supported.

Setting Up the Architecture

Note

Unless otherwise specified, all steps detailed below are still required if you are planning to use the HA/Resiliency Helm Chart (GitHub, ArtifactHub).

Step 1 - AWS EKS Cluster

Nexus Repository runs on an AWS EKS cluster spread across two or more AZs within a single AWS region. You can control the number of nodes by setting the min, max, and desired nodes parameters. EKS ensures that the desired number of Nexus Repository instances runs within the cluster. If something causes an instance or the node to go down, AWS will spin up another one. If an AZ becomes unavailable, AWS spins up new node(s) in the secondary AZ.

Begin by setting up the EKS cluster in the AWS web console, ensuring your nodes are spread across two or more AZs in one region. The EKS cluster must be created in a virtual private cloud (VPC) with two or more public or private subnets in different AZs. AWS provides instructions for managed nodes (i.e., EC2) in their documentation.

Note

This validation section describes AWS products, field names, and UI elements as documented at the time of writing. Note that AWS may change labels or UI elements over time, and these validation steps may not be updated immediately.

1. Validate the VPCs, subnets, and their network connections in the AWS VPC console under VPC → Your VPCs → <Your VPC Name>. Consider AWS's best practices for VPCs and subnets:

2. In the AWS IAM console, validate that your nodegroup has an IAM role that has the following policies assigned (See AWS IAM Roles documentation):

AmazonEKSWorkerNodePolicy

AmazonEC2ContainerRegistryReadOnly

AmazonEKS_CNI_Policy

AmazonS3FullAccess (for S3 access)

If you want to use CloudWatch to stream logs, add the following policies:

CloudWatchFullAccess

CloudWatchAgentServerPolicy (to use AmazonCloudWatchAgent on servers)

3. In the AWS IAM console under IAM → Roles → <Your EKS Cluster Role>, ensure that the EKS cluster's role has AmazonEKSClusterPolicy permissions listed in the Permissions tab.

4. Ensure that the role above is attached to the EKS cluster by checking the Cluster IAM role ARN section under EKS → Clusters → <cluster-name> in the AWS EKS console.

5. Ensure that the cluster security group allows traffic into the cluster under the Inbound rules tab located in the VPC → Security Groups section of the AWS console.

6. Each Sonatype Nexus Repository instance requires at least 4 CPUs and 8 GB of memory. Ensure the EC2 nodes for the nodegroup are configured appropriately.

7. Ensure that enough IP addresses are available in your subnets to account for the cluster, nodes, and other Kubernetes resources.

8. Ensure that the EKS API server endpoint is set to your desired setting: Public, Private, or both. This decision should be based on your requirements for exposing the EKS cluster outside of the VPC. Follow AWS documentation to control access to EKS clusters.

9. Enable DNS resolution and DNS hostnames for the VPC in the DNS settings section.

10. Under Security → Network ACLs → <Your-NACL>, ensure that the Network ACL's inbound and outbound rules allow traffic into and from the subnets. (See the AWS NACL documentation.)

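11. Ensure that an IAM OIDC identity provider exists for your cluster by running the following command, where $oidc_id is the ID at the end of your cluster's OIDC issuer URL (the cluster.identity.oidc.issuer value returned by aws eks describe-cluster). If the command prints an ID, the provider exists:

aws iam list-open-id-connect-providers | grep $oidc_id | cut -d "/" -f4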

If it does not exist, use the command below to create an IAM OIDC identity provider:

eksctl utils associate-iam-oidc-provider --cluster <cluster-name> --approve

If eksctl is not installed, create the IAM OIDC provider using the AWS web console from IAM → Identity providers.
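The check in step 11 depends on a $oidc_id value that has to be derived first. The following is a minimal sketch, assuming the AWS CLI and eksctl are installed and configured; the function names are hypothetical. It takes the ID from the issuer URL, runs the same list-and-grep check, and only calls eksctl when no matching provider is found.

```shell
#!/usr/bin/env bash
# Sketch only: derive $oidc_id from the cluster's issuer URL and create the
# IAM OIDC provider if none matches. Function names are hypothetical.

# Issuer URLs look like https://oidc.eks.<region>.amazonaws.com/id/<ID>.
# Splitting on "/" gives "https:", "", host, "id", "<ID>", so field 5 is the ID.
oidc_id_from_issuer() {
  printf '%s\n' "$1" | cut -d '/' -f 5
}

# Look up the issuer for a cluster and print its OIDC ID.
cluster_oidc_id() {
  issuer=$(aws eks describe-cluster --name "$1" \
    --query 'cluster.identity.oidc.issuer' --output text)
  oidc_id_from_issuer "$issuer"
}

# Run the step 11 check; create the provider only if it prints nothing.
ensure_oidc_provider() {
  cluster="$1"
  oidc_id=$(cluster_oidc_id "$cluster")
  # An empty ID would make grep match every provider, so stop early.
  if [ -z "$oidc_id" ]; then
    echo "Could not determine the OIDC ID for ${cluster}" >&2
    return 1
  fi
  if [ -z "$(aws iam list-open-id-connect-providers | grep "$oidc_id" | cut -d '/' -f 4)" ]; then
    eksctl utils associate-iam-oidc-provider --cluster "$cluster" --approve
  else
    echo "OIDC provider ${oidc_id} already exists"
  fi
}

# Example: ensure_oidc_provider <cluster-name>
```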

12. In the AWS EKS console under EKS → Clusters → <Cluster-Name> → Add-ons, check that you have installed the add-ons below and that you are using versions that are compatible with your EKS version:

13. In the AWS CLI, run the following command; it should return the cluster information.

aws eks describe-cluster --name <cluster-name> --region <region-code>

14. Run the kubectl command below to ensure that nodes are created:

kubectl get nodes

15. In the AWS console under EC2 → Auto Scaling → Auto Scaling groups, ensure that the auto scaling group exists for the EKS cluster and has the desired number of nodes and AZs; this will have been created automatically with the EKS cluster. See the AWS auto scaling groups documentation.
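Steps 14 and 15 confirm that nodes exist. They do not confirm that the nodes actually span two or more AZs, which this architecture relies on. The sketch below is one way to check this from kubectl. It assumes your nodes carry the standard topology.kubernetes.io/zone label, and its function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm the worker nodes span at least two AZs using the
# topology.kubernetes.io/zone node label. Function names are hypothetical.

# Count distinct, non-empty zone names read from stdin (one per line).
count_zones() {
  sed '/^$/d' | sort -u | wc -l | tr -d ' '
}

# Print one zone label per node (dots in the label key are escaped for JSONPath).
node_zones() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.labels.topology\.kubernetes\.io/zone}{"\n"}{end}'
}

# Fail when fewer than the required number of AZs (default 2) have nodes.
check_az_spread() {
  required="${1:-2}"
  zones=$(node_zones | count_zones)
  if [ "$zones" -lt "$required" ]; then
    echo "Nodes span ${zones} AZ(s); expected at least ${required}" >&2
    return 1
  fi
  echo "Nodes span ${zones} AZs"
}

# Example: check_az_spread 2
```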

Step 2 - Elastic File System Dynamic Provisioning

In this step, create an Elastic File System (EFS) and a service account with an IAM policy that allows the cluster to use EFS storage. Install the EFS CSI driver to create access points for accessing EFS. EFS storage persists the files that the Nexus Repository pods need when Kubernetes restarts a pod in a different availability zone.

Follow the AWS documentation to create an EFS in the AWS console.

The EFS CSI driver creates an access point when provisioning storage.

The VPC used by your EFS file system must be the same as the one used by your EKS cluster.

Set the EFS security group to allow inbound traffic on port 2049 (NFS) from your EKS cluster security group. This is the security group used by all nodes in your EKS cluster.
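If you prefer the CLI to the console for this security group rule, the following minimal sketch adds it. It assumes the AWS CLI is configured and that your nodes use the cluster security group that EKS created. The function names are hypothetical, and the security group IDs come from your own environment.

```shell
#!/usr/bin/env bash
# Sketch: allow NFS (TCP 2049) into the EFS security group from the EKS
# cluster security group. Function names are hypothetical.

# Print the cluster security group that EKS created for the cluster.
cluster_security_group() {
  aws eks describe-cluster --name "$1" \
    --query 'cluster.resourcesVpcConfig.clusterSecurityGroupId' --output text
}

# Add an inbound rule on the EFS security group ($1) for traffic from $2.
allow_nfs_from() {
  efs_sg="$1"
  source_sg="$2"
  aws ec2 authorize-security-group-ingress \
    --group-id "$efs_sg" \
    --protocol tcp \
    --port 2049 \
    --source-group "$source_sg"
}

# Example (placeholders):
#   allow_nfs_from <efs-security-group-id> "$(cluster_security_group <cluster-name>)"
```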

Create an IAM policy using the AWS-provided example (see the GitHub file iam-policy-example.json) and save it as iam-policy.json.

Use the following command to create the policy after saving your file:

aws iam create-policy \
  --policy-name EFSCSIControllerIAMPolicy \
  --policy-document file://iam-policy.json

Take note of the policy ARN, because you will need it in the next step.

Create an IAM service account using a command like the following:

eksctl create iamserviceaccount \
  --cluster=<cluster-name> \
  --region=<region-name> \
  --namespace=kube-system \
  --name=efs-csi-controller-sa \
  --override-existing-serviceaccounts \
  --attach-policy-arn=arn:aws:iam::<aws-account-number>:policy/EFSCSIControllerIAMPolicy \
  --approve

Install the EFS CSI driver using its Helm chart with the following commands. Update the parameters (for example, the region in the image.repository registry) to match your deployment.

helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/

helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \
  --namespace kube-system \
  --set image.repository=602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/aws-efs-csi-driver \
  --set controller.serviceAccount.create=false \
  --set controller.serviceAccount.name=efs-csi-controller-sa

Review the required updates to the values.yaml before installing the Nexus Repository Helm chart, as documented in the README file.

Step 3 - Service Account

This step is not required when using the HA Helm chart, which creates the service account automatically.

The Kubernetes Service Account is associated with an IAM role containing the policies for access to S3 and AWS Secrets Manager. The statefulset uses this account when deploying Nexus Repository containers.

Run the Service Account YAML to establish the service account.

Ensure that the IAM role has the AmazonS3FullAccess permissions.

Set a trust relationship between the role and the Kubernetes service account.

The trust relationship information is under IAM → Roles → Trust relationships in the AWS console.

Verify that the service account is the same one specified for the statefulset in the values.yaml.

Learn more from the AWS documentation on how to use trust policies with IAM roles.
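This step pairs an annotated Kubernetes service account with an IAM trust policy, and the two pieces can be sketched as follows. The sketch assumes the standard IAM roles for service accounts (IRSA) mechanism. The example name nexus-repository-deployment-sa is taken from the chart's values.yaml comments. The namespace, account ID, OIDC provider, and role ARN are placeholders, and the function names are hypothetical. Apply the manifest with kubectl apply -f and pass the JSON to aws iam create-role --assume-role-policy-document.

```shell
#!/usr/bin/env bash
# Sketch: generate the service account manifest and the IAM trust policy.
# Function names are hypothetical; all values are supplied by the caller.

# Write a ServiceAccount annotated with the IAM role ARN it should assume.
write_service_account() {
  name="$1"; namespace="$2"; role_arn="$3"; out="$4"
  cat > "$out" <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${name}
  namespace: ${namespace}
  annotations:
    eks.amazonaws.com/role-arn: ${role_arn}
EOF
}

# Write a trust policy that lets only this service account assume the role.
# $2 is the OIDC provider without the https:// prefix.
write_trust_policy() {
  account_id="$1"; oidc_provider="$2"; namespace="$3"; name="$4"; out="$5"
  cat > "$out" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${account_id}:oidc-provider/${oidc_provider}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${oidc_provider}:sub": "system:serviceaccount:${namespace}:${name}",
          "${oidc_provider}:aud": "sts.amazonaws.com"
        }
      }
    }
  ]
}
EOF
}

# Example (placeholders):
#   write_service_account nexus-repository-deployment-sa <namespace> <role-arn> nexus-sa.yaml
#   write_trust_policy <account-id> <oidc-provider> <namespace> nexus-repository-deployment-sa trust.json
```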

Step 4 - AWS Aurora PostgreSQL Cluster

An Aurora PostgreSQL cluster containing three DB instances (one writer and two replicas) spread across three AZs in the region where you've deployed your EKS cluster provides an external database for Nexus Repository configurations and component metadata. We recommend creating these instances in the same AZs as the EKS cluster.

AWS provides instructions on creating an Aurora database cluster in their documentation.

1. Ensure that the pg_trgm (trigram) extension is installed. The command below lists the extensions installed in the database:

SELECT * FROM pg_extension;

2. Verify that the DB security group allows inbound traffic on port 5432 from the EKS cluster security group.

3. Ensure you have created a custom DB Cluster Parameter group, adjusted the max_connections parameter, and assigned it to the DB cluster. See our Prerequisite Step: Adjust max_connections.

4. Connect to the PostgreSQL database as described in the AWS documentation for connecting to a DB cluster to validate that it is accessible with the hostname, ports, and credentials that you configured in the Kubernetes YAML files or Helm chart.

5. Log in to PostgreSQL to validate that there is a database called "nexus" (or whatever name you chose when completing step 1 of Migrating to PostgreSQL).
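Checks 1 and 5 can be scripted with psql. The minimal sketch below assumes psql is installed on a host that can reach the cluster endpoint. Its $1 argument is a libpq connection string, for example "host=<cluster-endpoint> port=5432 dbname=nexus user=<user>" (placeholders). The function names are hypothetical, and CREATE EXTENSION requires sufficient privileges on the database.

```shell
#!/usr/bin/env bash
# Sketch: check for (and optionally create) the pg_trgm extension with psql.
# Function names are hypothetical.

# Succeeds if "pg_trgm" appears as a whole line on stdin.
has_pg_trgm() {
  grep -qx 'pg_trgm'
}

# -t prints rows only and -A disables alignment, so each extension name
# is printed on its own line.
check_pg_trgm() {
  psql -tA -c 'SELECT extname FROM pg_extension;' "$1" | has_pg_trgm
}

# Create the extension in the connected database if it is missing.
enable_pg_trgm() {
  psql -c 'CREATE EXTENSION IF NOT EXISTS pg_trgm;' "$1"
}

# Example (placeholder connection string):
#   check_pg_trgm "host=<cluster-endpoint> port=5432 dbname=nexus user=<user>" \
#     || enable_pg_trgm "host=<cluster-endpoint> port=5432 dbname=nexus user=<user>"
```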

Step 5 - AWS Load Balancer Controller

The AWS Load Balancer Controller allows you to provision an AWS ALB via an Ingress type specified in your Kubernetes deployment YAML file. This load balancer, which is provisioned in one of the public subnets specified when you create the cluster, allows you to reach the Nexus Repository pod from outside the EKS cluster. This is necessary because the nodes on which EKS runs the Nexus Repository pod are in private subnets and are otherwise unreachable from outside the EKS cluster.

Follow the AWS documentation to deploy the AWS LBC to your EKS cluster. If you are unfamiliar with the AWS Load Balancer Controller and Ingress, read the AWS blog on AWS Load Balancer Controller.

If you encounter any errors when creating a load balancer using the AWS Load Balancer Controller, follow the steps in AWS's troubleshooting knowledgebase article.

1. Navigate to IAM → Roles → <Load balancer role name> → Trust relationships in the AWS console. Ensure that the service account is associated with an IAM role and that there is a trust relationship defined between the IAM role and the service account. Follow the AWS documentation to validate the trust relationship. (Also see the AWS documentation on how to use trust policies with IAM roles.)

2. Run the command below to validate deployment creation:

kubectl get deployment -n kube-system aws-load-balancer-controller

If the deployment for the load balancer will not start, you may need to delete and recreate the load balancer.

3. If your ingress was not successfully created after several minutes, run the following command to view the AWS Load Balancer Controller logs. These logs may contain error messages that you can use to diagnose issues with your deployment.

kubectl logs -f -n kube-system -l app.kubernetes.io/instance=aws-load-balancer-controller

4. Run the following command and ensure that the service account created has the AWS IAM role annotated (also see the AWS documentation on configuring role and service account):

kubectl describe sa aws-load-balancer-controller -n kube-system

If not annotated, use the following command:

kubectl annotate serviceaccount -n $namespace $service_account eks.amazonaws.com/role-arn=arn:aws:iam::$account_id:role/my-role

5. Ensure that the IAM role created has the AWSLoadBalancerControllerIAMPolicy permission. (See the AWS documentation on IAM roles for service accounts.)

6. Validate that the IAM service account is listed in the CloudFormation stack under CloudFormation → Stacks in the AWS CloudFormation console. (See the AWS documentation on working with stacks.)

7. Validate that the ALB created appears under EC2 → Load balancers in the AWS console.
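Check 4 (inspect the annotation, then annotate only if it is missing) can be combined into one idempotent helper. This is a sketch with hypothetical function names that uses only kubectl get with JSONPath and the kubectl annotate command shown in check 4. The same helper also fits the ebs-csi-controller-sa check in the licensing section.

```shell
#!/usr/bin/env bash
# Sketch: annotate a service account with its IAM role ARN only when the
# annotation is missing. Function names are hypothetical.

# Print the role ARN annotation (empty if the annotation is absent).
sa_role_arn() {
  kubectl get serviceaccount "$2" -n "$1" \
    -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}'
}

ensure_sa_role_annotation() {
  namespace="$1"; sa="$2"; role_arn="$3"
  current=$(sa_role_arn "$namespace" "$sa")
  if [ -z "$current" ]; then
    kubectl annotate serviceaccount -n "$namespace" "$sa" \
      "eks.amazonaws.com/role-arn=${role_arn}"
  else
    echo "${sa} already annotated with ${current}"
  fi
}

# Example (placeholder ARN):
#   ensure_sa_role_annotation kube-system aws-load-balancer-controller <role-arn>
```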

Step 6 - Kubernetes Namespace

Tip

If you plan to use the HA Helm Chart (GitHub, ArtifactHub), you do not need to perform the step below; the Helm chart will create this for you.

A namespace allows you to isolate groups of resources in a single cluster. Resource names must be unique within a namespace, but not across namespaces. See the Kubernetes documentation about namespaces for more information.

To create a namespace, use a command like the one below with the kubectl command-line tool:

kubectl create namespace <namespace>


Use the command below to validate namespace creation:

kubectl get ns <namespace>
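For non-Helm deployments that may be re-run, the create and validate commands can be folded into a single idempotent step. This is a minimal sketch, and the function name is hypothetical. Do not use it with the HA Helm chart, which creates the namespace itself.

```shell
#!/usr/bin/env bash
# Sketch: create the namespace only if it does not already exist, so the
# step can be re-run safely. Function name is hypothetical.
ensure_namespace() {
  ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace ${ns} already exists"
  else
    kubectl create namespace "$ns"
  fi
}

# Example: ensure_namespace <namespace>
```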

Step 7 - Licensing

There are two options to use for license management:

AWS Secrets Manager - When using the HA/Resiliency Helm Chart or YAML files

Configure License in Helm Chart - When using the HA/Resiliency Helm Chart but not using AWS Secrets Manager

Licensing Option 1 - AWS Secrets Manager

AWS Secrets Manager stores the license and the database connection properties. During a failover, the license is retrieved from Secrets Manager when the new container starts.

As of Nexus Repository release 3.74.0, you have the option of using an external secrets operator rather than the secret store CSI driver. This is recommended since it supports several external secret stores.

External Secrets Operator (Recommended)

To use an external secrets operator, locate the external secrets portion of your values.yaml (shown in the code block below) and complete the steps detailed in the Helm chart README

externalsecrets:
  enabled: false
  secretstore:
    name: nexus-secret-store
    spec:
      provider:
        # aws:
        #   service: SecretsManager
        #   region: us-east-1
        #   auth:
        #     jwt:
        #       serviceAccountRef:
        #         name: nexus-repository-deployment-sa # Use the same service account name as specified in serviceAccount.name
    # Example for Azure
    # spec:
    #   provider:
    #     azurekv:
    #       authType: WorkloadIdentity
    #       vaultUrl: "https://xx-xxxx-xx.vault.azure.net"
    #       serviceAccountRef:
    #         name: nexus-repository-deployment-sa # Use the same service account name as specified in serviceAccount.name
  secrets:
    nexusSecret:
      enabled: false
      refreshInterval: 1h
      providerSecretName: nexus-secret.json
      decodingStrategy: null # For Azure set to Base64
    database:
      refreshInterval: 1h
      valueIsJson: false
      providerSecretName: dbSecretName # The name of the AWS SecretsManager/Azure KeyVault/etc. secret
      dbUserKey: username # The name of the key in the secret that contains your database username
      dbPasswordKey: password # The name of the key in the secret that contains your database password
      dbHostKey: host # The name of the key in the secret that contains your database host
    admin:
      refreshInterval: 1h
      valueIsJson: false
      providerSecretName: adminSecretName # The name of the AWS SecretsManager/Azure KeyVault/etc. secret
      adminPasswordKey: "nexusAdminPassword" # The name of the key in the secret that contains your nexus repository admin password
    license:
      providerSecretName: nexus-repo-license.lic # The name of the AWS SecretsManager/Azure KeyVault/etc. secret that contains your Nexus Repository license
      decodingStrategy: null # Can be Base64
      refreshInterval: 1h

Secret Store CSI Driver Instructions

If you do not wish to use an external secrets operator, you can use the Secrets Store CSI driver. Follow the AWS documentation for Secrets Store CSI Drivers to mount the license secret, which is stored in AWS Secrets Manager, as a volume in the pod running Nexus Repository.

Note

Read all the instructions below to build a complete set of parameters to include in the commands.

When you reach the command for installing the Secret Store CSI Driver, include the --set syncSecret.enabled=true flag. This will ensure that secrets are automatically synced from AWS Secrets Manager into the Kubernetes secrets specified in the secrets YAML.

Only the AWS CLI supports storing a binary license file. AWS provides documentation for using a --secret-binary argument in the CLI.

The command will look as follows:

aws secretsmanager create-secret --name supersecretlicense --secret-binary fileb://license-file.lic --region <region>

This will return a response such as this:

{
  "VersionId": "4cd22597-f0a9-481c-8ccd-683a5210eb2b",
  "Name": "supersecretlicense",
  "ARN": "arn:aws:secretsmanager:<region>:<account id>:secret:license-string"
}

You will put the ARN value in the secrets YAML.

Note

If updating the license (e.g., when renewing your license and receiving a new license binary), you'll need to restart the Nexus Repository pod after uploading the license to the AWS Secrets Manager. The AWS CLI command for updating a secret is put-secret-value.
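The renewal described in this note can be sketched as a single helper, assuming the AWS CLI and kubectl are configured. It uploads the new binary with put-secret-value and then triggers a rolling restart. The function name and the StatefulSet name are hypothetical, so use the StatefulSet name from your own deployment.

```shell
#!/usr/bin/env bash
# Sketch: upload a renewed license binary, then restart Nexus Repository so
# it reads the new secret. Function and StatefulSet names are hypothetical.
update_license() {
  secret_id="$1"; license_file="$2"; namespace="$3"; statefulset="$4"
  if [ ! -f "$license_file" ]; then
    echo "License file not found: ${license_file}" >&2
    return 1
  fi
  # fileb:// makes the CLI send the file as raw binary.
  aws secretsmanager put-secret-value \
    --secret-id "$secret_id" \
    --secret-binary "fileb://${license_file}" || return 1
  kubectl rollout restart "statefulset/${statefulset}" -n "$namespace"
}

# Example (placeholders):
#   update_license supersecretlicense new-license.lic <namespace> <statefulset-name>
```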

When you reach the command for creating an IAM service account, follow these additional instructions:

You must include two additional parameters when running the command: --role-only and --namespace <nexusrepo namespace>.

It is important to include the --role-only option in the eksctl create iamserviceaccount command so that the Helm chart manages the Kubernetes service account.

The namespace you specify to eksctl create iamserviceaccount must be the same namespace into which you will deploy the Nexus Repository pod. Although the namespace does not exist at this point, you must specify it as part of the command. Do not create that namespace manually beforehand; the Helm chart will create and manage it.

You should specify this same namespace as the value of nexusNs in your values.yaml.

Your command should look similar to the following, where $POLICY_ARN is the ARN of the access policy you created in a previous step while following the AWS documentation:

eksctl create iamserviceaccount \
  --name <sa-name> \
  --namespace <nexusrepo namespace> \
  --region="$REGION" \
  --cluster "$CLUSTERNAME" \
  --attach-policy-arn "$POLICY_ARN" \
  --approve \
  --override-existing-serviceaccounts \
  --role-only

Note that the command will not create a Service Account but will create an IAM role only.

Note

This section assumes you have installed the HA/Resiliency Helm Chart (GitHub, ArtifactHub) or Sample AWS YAMLs.

1. Ensure that the nodes in the EKS cluster have an IAM role with GetSecretValue and DescribeSecret permissions and that this role has a trust relationship with the service account (you can find the trust relationship information under IAM → Roles → Role Name → Trust relationships in the AWS console). (Also see the AWS documentation on how to use trust policies with IAM roles.)

2. Run the following command to validate that the IAM role for the Kubernetes service account exists:

eksctl get iamserviceaccount --cluster "$CLUSTERNAME" --region "$REGION"

3. Run the command below to validate that the service account for the EBS controller has the AWS IAM role annotated (also see the AWS documentation on configuring role and service account):

kubectl describe sa ebs-csi-controller-sa -n kube-system

If it is not annotated, use the command below:

kubectl annotate serviceaccount -n $namespace $service_account eks.amazonaws.com/role-arn=arn:aws:iam::$account_id:role/my-role

4. Using the command below, validate that the secrets were created when you installed the Helm chart or used the provided YAML file:

kubectl get secrets -n <namespace>

If they are missing, the --set syncSecret.enabled=true flag may not have been included in the Helm command for installing the Secrets Store CSI Driver.

To fix this, uninstall the Secrets Store CSI Driver Helm chart and install it again with the --set syncSecret.enabled=true flag.

Licensing Option 2 - Configure License in Helm Chart

This option is only for those using the HA/Resiliency Helm Chart but not AWS Secrets Manager.

In the values.yaml, locate the license section as shown below.

license:
  name: nexus-repo-license.lic
  licenseSecret:
    enabled: false
    file: # Specify the license file name with --set-file license.licenseSecret.file="file_name" helm option
    fileContentsBase64: your_license_file_contents_in_base_64
    mountPath: /var/nexus-repo-license

Change license.licenseSecret.enabled to true.

Do one of the following:

Specify your license file with the following:

--set-file license.licenseSecret.file="file_name"

Put the base64 representation of your license file in the below value:

license.licenseSecret.fileContentsBase64
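For the second option, the value must be the license encoded as a single base64 line. The minimal sketch below (function name hypothetical) strips newlines so the output stays on one line whether or not the local base64 tool wraps long output. The Helm command in the comment uses placeholders for the release, chart, and namespace.

```shell
#!/usr/bin/env bash
# Sketch: encode a license file as one base64 line for
# license.licenseSecret.fileContentsBase64. Function name is hypothetical.
license_to_base64() {
  base64 < "$1" | tr -d '\n'
}

# Example (placeholders):
#   helm upgrade --install <release> <chart> -n <namespace> \
#     --set license.licenseSecret.enabled=true \
#     --set license.licenseSecret.fileContentsBase64="$(license_to_base64 license.lic)"
```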

Step 8 - AWS CloudWatch (Optional but Recommended)

Tip

If you plan to use the HA/Resiliency Helm Chart (GitHub, ArtifactHub), you do not need to perform this step; the Helm chart will handle it for you.

When running Nexus Repository on Kubernetes, it is possible for it to run on different nodes in different AZs over the course of the same day. In order to be able to access Nexus Repository's logs from nodes in all AZs, you must externalize your logs. We recommend that you externalize your logs to CloudWatch for this architecture; however, if you choose not to use CloudWatch, you must externalize your logs to another external log aggregator to make sure that the Nexus Repository logs survive node crashes or when pods are scheduled on different nodes as is possible when running Nexus Repository on Kubernetes. Follow AWS documentation to set up Fluent Bit for gathering logs from your EKS cluster.

When first installed, Nexus Repository sends task logs to separate files. Those files do not exist at startup and only exist as the tasks are being run. In order to facilitate sending logs to CloudWatch, you need the log file to exist when Nexus Repository starts up. The nxrm-logback-tasklogfile-override.yaml available in our sample files GitHub repository sets this up.

Once Fluent Bit is set up and running on your EKS cluster, apply the fluent-bit.yaml to configure it to stream Nexus Repository's logs to CloudWatch. The specified Fluentbit YAML sends the logs to CloudWatch log streams within a nexus-logs log group.

AWS also provides documentation for setting up and using CloudWatch.

Note

This section assumes the following:

Helm-Based Deployments - You must have set fluentbit.enabled to "true" in values.yaml and installed the HA Helm Chart (GitHub, ArtifactHub).

Non-Helm Deployments - You must have edited the fluentbit yaml as appropriate for your environment and applied it to your cluster.

1. Ensure that the IAM role assigned to your EKS node(s) has the following permissions:

CloudWatchFullAccess

CloudWatchAgentServerPolicy

2. Ensure that the DaemonSet and Pods for the fluent-bit configuration are running using a command like the following:

kubectl get all -n amazon-cloudwatch

3. Log into the fluent-bit pod using a command like the following:

kubectl exec -ti --namespace amazon-cloudwatch <fluent-bit pod name> -- sh

4. Validate that the logs are being written to /var/log/containers.
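Check 2 can be made stricter by comparing the DaemonSet's desired and ready pod counts instead of reading kubectl get all output by eye. This sketch assumes the DaemonSet is named fluent-bit in the amazon-cloudwatch namespace, so adjust both to match your fluent-bit.yaml. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm every scheduled fluent-bit pod is ready. The DaemonSet
# name and namespace defaults are assumptions; function names are hypothetical.

# Succeeds when desired ($1) is a positive number equal to ready ($2).
ds_is_ready() {
  [ -n "$1" ] && [ "$1" -gt 0 ] 2>/dev/null && [ "$1" = "$2" ]
}

check_fluent_bit() {
  ns="${1:-amazon-cloudwatch}"; name="${2:-fluent-bit}"
  status=$(kubectl get daemonset "$name" -n "$ns" \
    -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')
  read -r desired ready <<EOF
$status
EOF
  if ds_is_ready "$desired" "$ready"; then
    echo "${name}: ${ready}/${desired} pods ready"
  else
    echo "${name}: ${ready:-0}/${desired:-0} pods ready" >&2
    return 1
  fi
}

# Example: check_fluent_bit amazon-cloudwatch fluent-bit
```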

Step 9 - External DNS (Optional)

If you are using or wish to use our Docker Subdomain Connector feature, you will need to use external-dns to create 'A' records in AWS Route 53.

You must meet all Docker Subdomain Connector feature requirements, and you must specify an HTTPS certificate ARN in the Ingress YAML.

You must also add your Docker subdomains to your values.yaml.

Permissions

You must first ensure you have appropriate permissions. To grant these permissions, open a terminal that has connectivity to your EKS cluster and run the following commands:

Note

The commands below do not register a domain for you; you must have a registered domain before completing this step.

1. Use the following to create the policy JSON file.

cat <<'EOF' > external-dns-r53-policy.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "route53:ChangeResourceRecordSets"
      ],
      "Resource": [
        "arn:aws:route53:::hostedzone/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:ListHostedZones",
        "route53:ListResourceRecordSets"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}
EOF

2. Use the following to set up permissions that allow external DNS to create Route 53 records.

aws iam create-policy --policy-name "AllowExternalDNSUpdates" --policy-document file://external-dns-r53-policy.json

POLICY_ARN=$(aws iam list-policies --query 'Policies[?PolicyName==`AllowExternalDNSUpdates`].Arn' --output text)

EKS_CLUSTER_NAME=<Your EKS Cluster Name>

eksctl utils associate-iam-oidc-provider --cluster $EKS_CLUSTER_NAME --approve

ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

OIDC_PROVIDER=$(aws eks describe-cluster --name $EKS_CLUSTER_NAME --query "cluster.identity.oidc.issuer" --output text | sed -e 's|^https://||')

Note

The value you assign to the EXTERNALDNS_NS variable below should be the same as the one you specify in your values.yaml for namespaces.externaldnsNs.

EXTERNALDNS_NS=nexus-externaldns

cat <<-EOF > externaldns-trust.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::$ACCOUNT_ID:oidc-provider/$OIDC_PROVIDER"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "$OIDC_PROVIDER:sub": "system:serviceaccount:${EXTERNALDNS_NS}:external-dns",
          "$OIDC_PROVIDER:aud": "sts.amazonaws.com"
        }
      }
    }
  ]
}
EOF

IRSA_ROLE="nexusrepo-external-dns-irsa-role"

aws iam create-role --role-name $IRSA_ROLE --assume-role-policy-document file://externaldns-trust.json

aws iam attach-role-policy --role-name $IRSA_ROLE --policy-arn $POLICY_ARN

ROLE_ARN=$(aws iam get-role --role-name $IRSA_ROLE --query Role.Arn --output text)

echo $ROLE_ARN

Take note of the ROLE_ARN output above and specify it in your values.yaml for serviceAccount.externaldns.role.

External DNS YAML

Tip

If you plan to use the HA Helm Chart (GitHub, ArtifactHub), you do not need to perform the step below; the Helm chart will create this for you.

After setting up the permissions above, apply the external-dns.yaml.

Then, in the ingress.yaml, specify the Docker subdomains you want to use for your Docker repositories.

Note

This section assumes the following:

Helm-Based Deployments - You must have set externaldns.enabled to "true" in the values.yaml and installed the HA Helm Chart (GitHub, ArtifactHub).

Non-Helm Deployments - You must have edited the external dns yaml as appropriate for your environment and applied it to your cluster.

1. Ensure that you have an IAM role associated with a service account with the AllowExternalDNSUpdates permission and a trust relationship between the role and service account (you can find the trust relationship information under IAM → Role → <Role Name> → Trust relationships in the AWS console). (Also see the AWS documentation on how to use trust policies with IAM roles.)

2. Ensure that A records are created in the Route 53 hosted zone for the required Docker subdomains. (See the AWS Route 53 documentation on working with hosted zones and their documentation on listing records.)

3. Use the AWS Test record function in Route 53 to validate that the Route 53 records for the subdomains are returning "No Error." (See the AWS Route 53 documentation for Checking DNS responses from Route 53.)

4. Ensure that the subdomain names you provided in the YAML files or values.yaml (for Helm-based deployments) match the subdomains that you want to configure in Sonatype Nexus Repository. This is the name you enter beside the Allow Subdomain Routing checkbox as documented in our Docker Subdomain Connector help documentation.
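The Test record function in check 3 also has a CLI counterpart, aws route53 test-dns-answer, so you can check several subdomains at once. The sketch below assumes the AWS CLI is configured. The hosted zone ID and subdomain names are placeholders, and the function name is hypothetical. It prints each subdomain's response code and fails if any of them is not NOERROR.

```shell
#!/usr/bin/env bash
# Sketch: run Route 53's DNS answer test for each Docker subdomain and fail
# if any A record does not return NOERROR. Function name is hypothetical.
check_docker_subdomains() {
  zone_id="$1"
  shift
  failed=0
  for name in "$@"; do
    code=$(aws route53 test-dns-answer \
      --hosted-zone-id "$zone_id" \
      --record-name "$name" \
      --record-type A \
      --query 'ResponseCode' --output text)
    if [ "$code" = "NOERROR" ]; then
      echo "${name}: ${code}"
    else
      echo "${name}: ${code:-no response}" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Example (placeholders):
#   check_docker_subdomains <hosted-zone-id> <docker-subdomain-1> <docker-subdomain-2>
```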

Step 10 - Dynamic Provisioning Storage Class

Run the storage class YAML. This storage class will dynamically provision EFS mounts for use by each of your Nexus Repository pods. You must make sure that you have an AWS EFS Provisioner installed in your EKS cluster.

Note that the Helm chart supports both EBS volumes and EFS mounts, but we strongly recommend using EFS. If you do use EBS volumes, you will need to use an EBS Provisioner.
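For non-Helm deployments, a storage class for EFS dynamic provisioning might look like the manifest generated below. This is a sketch based on the aws-efs-csi-driver dynamic provisioning examples (provisioningMode efs-ap creates one access point per volume). The class name and file system ID are placeholders, the function name is hypothetical, and you should check the parameters against the storage class YAML or Helm values you actually use.

```shell
#!/usr/bin/env bash
# Sketch: write an EFS StorageClass for EFS CSI dynamic provisioning.
# Function name is hypothetical; name and file system ID are caller-supplied.
write_efs_storage_class() {
  name="$1"; file_system_id="$2"; out="$3"
  cat > "$out" <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: ${file_system_id}
  directoryPerms: "700"
EOF
}

# Example (placeholders):
#   write_efs_storage_class efs-sc <efs-file-system-id> efs-sc.yaml
#   kubectl apply -f efs-sc.yaml
```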

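To confirm that storage is being provisioned, check the access points under EFS → File systems → <your file system> → Access points in the AWS console (EBS volumes, if you use them, appear under EC2 → Volumes). If storage is not being provisioned, use the commands below to help determine possible causes: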

Describe your pod

kubectl describe pod -n <your namespace> <pod name>

Get the persistent volumes in your cluster

kubectl get pv

Describe a persistent volume in your cluster associated with your Sonatype Nexus Repository deployment

kubectl describe pv <name of persistent volume>

Get the persistent volume claims in your cluster

kubectl get pvc -A

Describe a persistent volume claim in your cluster associated with your Sonatype Nexus Repository deployment

kubectl describe pvc -n <namespace> <name of persistent volume claim>

Step 11 - AWS S3

Located in the same region as your EKS deployment, AWS S3 provides your object (blob) storage. AWS provides detailed documentation for S3 on their website.

1. In the AWS VPC console under VPC → Endpoints, ensure that you have a gateway endpoint for S3 in the VPC to avoid traffic having to go over the Internet. (See the AWS documentation on gateway endpoints.)

2. In the AWS VPC console under VPC → Route Tables, ensure that the route table for the subnets has a route added to the S3 gateway endpoint. (See the AWS documentation on configuring route tables.)
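Both checks can also be run from the CLI. The sketch below lists the S3 gateway endpoints in a VPC along with the route tables each one is associated with, so you can compare them with your subnets' route tables. It assumes the AWS CLI is configured and that the S3 service name follows the com.amazonaws.<region>.s3 pattern. The VPC ID and region are placeholders, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list S3 gateway endpoints in a VPC with their associated route
# tables. Function names are hypothetical.
s3_gateway_endpoints() {
  vpc_id="$1"; region="$2"
  aws ec2 describe-vpc-endpoints \
    --region "$region" \
    --filters "Name=vpc-id,Values=${vpc_id}" \
              "Name=service-name,Values=com.amazonaws.${region}.s3" \
    --query "VpcEndpoints[?VpcEndpointType=='Gateway'].[VpcEndpointId, join(',', RouteTableIds)]" \
    --output text
}

# Succeeds if at least one S3 gateway endpoint exists in the VPC.
has_s3_gateway() {
  [ -n "$(s3_gateway_endpoints "$1" "$2")" ]
}

# Example (placeholders): s3_gateway_endpoints <vpc-id> <region>
```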

Starting Your Deployment

At this point, you should have completed all of the steps for setting up your environment. The steps below start your deployment.

If you are using the HA/Resiliency Helm chart, you do still need to complete these steps. You will then need to follow the additional instructions in the Helm chart's Readme file.

Step 1 - StatefulSet

Run the StatefulSet YAML to start your Nexus Repository Pods.

Step 1 Validation (Optional)


1. Use the following command to validate that there are four containers running within each pod for each replica:

kubectl get po -n <namespace>

2. Execute the command below to ensure that Sonatype Nexus Repository is running without error:

kubectl logs <pod-name-from-previous-step> -n <namespace> -c nxrm-app
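Check 1 comes down to reading the READY column (for example 4/4) for every pod, and that can be automated. The following sketch parses kubectl get pods --no-headers output, where READY is the second column, and fails if any pod has containers that are not ready or if no pods are found. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify every container in every pod is ready by comparing the two
# halves of the READY column. Function names are hypothetical.

# Reads `kubectl get pods --no-headers` lines on stdin; column 2 is READY.
all_containers_ready() {
  awk '
    NF > 0 {
      seen = 1
      split($2, r, "/")
      if (r[1] != r[2]) {
        print "not ready: " $1 " (" $2 ")" > "/dev/stderr"
        bad = 1
      }
    }
    END {
      if (!seen) { print "no pods found" > "/dev/stderr"; bad = 1 }
      exit (bad ? 1 : 0)
    }'
}

check_nexus_pods() {
  kubectl get pods -n "$1" --no-headers | all_containers_ready
}

# Example: check_nexus_pods <namespace>
```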

Step 2 - Ingress YAML

Run the Ingress YAML to expose the service externally; this is required to allow you to communicate with the pods.

Step 2 Validation (Optional)


After running the YAML/Helm charts, use the following command to validate that the Ingress for the ALB was created:

kubectl get ingress/ingress-nxrm-nexus -n <namespace>

The output should display the ALB's DNS name.
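Once the ALB's DNS name appears, you can request Nexus Repository's status endpoint (/service/rest/v1/status) through it to confirm end-to-end reachability. This sketch assumes the Ingress name from the validation command above and plain HTTP by default, so pass https if your Ingress terminates TLS. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read the ALB hostname from the Ingress and request Nexus
# Repository's status endpoint through it. Function names are hypothetical.

alb_hostname() {
  kubectl get ingress ingress-nxrm-nexus -n "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Build the status URL for a host ($1) and optional scheme ($2, default http).
nexus_status_url() {
  printf '%s://%s/service/rest/v1/status\n' "${2:-http}" "$1"
}

probe_nexus() {
  namespace="$1"; scheme="${2:-http}"
  host=$(alb_hostname "$namespace")
  if [ -z "$host" ]; then
    echo "Ingress has no ALB hostname yet" >&2
    return 1
  fi
  # -f makes curl fail on HTTP errors such as 503 while Nexus is starting.
  curl -fsS -o /dev/null "$(nexus_status_url "$host" "$scheme")"
}

# Example: probe_nexus <namespace> https
```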

Sonatype Nexus Repository HA/Resiliency Helm Chart

Note

If you are using EKS version 1.23+, you must first install the AWS EBS CSI driver before running the HA/Resiliency Helm Chart (GitHub, ArtifactHub). We recommend using the EKS add-on option as described in AWS’s installation instructions.

Unless otherwise specified, all steps detailed above are still required if you are planning to use the HA Helm chart.

To use the HA/Resiliency Helm Chart (GitHub, ArtifactHub), after completing the steps above, use git to check out the Helm Chart repository. Follow the instructions in the associated Readme file.

Note

The Helm chart is set up to disable the default blob stores and repositories on all instances.

AWS YAML Order

For those not using a Helm chart, you must run your YAML files in the order below after creating a namespace:

Fluent-bit YAML (only required if using CloudWatch)

External-dns.yaml (Optional)

Ingress for Docker YAML (Optional)

Docker Services YAML (Optional)

Note

The resources that these YAMLs create are not in the default namespace.

The sample YAMLs are set up to disable the default blob stores and repositories on all instances.