Change Repository Blob Store Task Performance Testing

Overview

The Admin - Change repository blob store task allows you to change a repository's blob store source. This can be helpful for moving from local volume blob storage to AWS S3, from one S3 bucket to another, etc.

Note

Due to known issues with OrientDB, the task is disabled for OrientDB deployments.

Disclaimers

Sonatype tested the task under the specific controlled scenarios described here, so use these results only as a reference. Your environment is likely to vary.

This testing should not be considered functional testing. Functional validation covered only the basic path under expected conditions.

Due to the time required for the task to complete, some information in this report comes from statistical calculations instead of literal results; the calculations are based on actual result samples.

Performance Testing for Hosted and Proxy Repositories

For full transparency and to help you plan for using the Admin - Change repository blob store task, we are providing performance testing information for the following common scenarios:

Changing a blob store from a local volume blob store to an AWS S3 blob store

Changing a blob store from one AWS S3 blob store to another S3 blob store

Changing a blob store from one local volume file blob store to another local volume file blob store in a different volume

Key Takeaways

Successful results were achieved only for Sonatype Nexus Repository deployments using PostgreSQL and H2 databases. There is a known and confirmed issue with OrientDB: using this task to move more than 10GB results in database failure. The cause is not the task itself but the number of database changes the move requires. We do not recommend using this task to transfer more than 10GB in OrientDB deployments; doing so may result in database corruption.

The following list ranks the scenarios by performance, from best to worst:

local volume to local volume

local volume to S3 bucket

S3 bucket to local volume

S3 bucket to S3 bucket

The Admin - Change repository blob store task takes significant time to complete; plan accordingly before using the task.

Test Environment

The test environment was constructed as follows:

m5d.2xlarge EC2 nodes

16 GB maximum heap size for the JVM

local storage based on NVMe volumes

default network bandwidth for this EC2 node type

db.r5.xlarge RDS node type for PostgreSQL test runs

Testing used Raw format repositories.

Because this task does not depend on repository format, we expect these results to reflect general behavior regardless of the repository formats stored in the blob store.

Summary of Findings

| Scenario | Database | Time to move 10 GB | Time to move 100 GB | Time to move 1000 GB |
|---|---|---|---|---|
| Local volume to local volume | PostgreSQL | + 7 minutes | + 2 hours | + 11 hours, 30 minutes |
| Local volume to local volume | H2 | + 17 minutes | + 3 hours | + 30 hours |
| Local volume to local volume | OrientDB | Not supported | Not supported | Not supported |
| Local volume to S3 bucket | PostgreSQL | ~4 hours | ~40 hours | + 2 weeks |
| Local volume to S3 bucket | H2 | ~4 hours, 20 minutes | + 60 hours | + 3 weeks |
| Local volume to S3 bucket | OrientDB | Not supported | Not supported | Not supported |
| S3 bucket to local volume | PostgreSQL | ~6 hours, 40 minutes | + 2 days, 12 hours | + 2 weeks, 5 days |
| S3 bucket to local volume | H2 | + 8 hours | + 3 days, 6 hours | + 1 month |
| S3 bucket to local volume | OrientDB | Not supported | Not supported | Not supported |
| S3 bucket to S3 bucket | PostgreSQL | + 10 hours, 30 minutes | + 5 days | + 1 month and a half |
| S3 bucket to S3 bucket | H2 | + 11 hours, 50 minutes | + 5 days | + 1 month, 3 weeks |
| S3 bucket to S3 bucket | OrientDB | Not supported | Not supported | Not supported |
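To get a rough planning figure for a size between the tested points, you can interpolate between the reported PostgreSQL durations. The sketch below is a hypothetical helper, not a Sonatype tool. The names `POSTGRES_HOURS` and `estimate_hours` are illustrative, and it makes three assumptions. First, the "+" values, which are lower bounds in the report, are treated as point values. Second, one month is taken as 30 days. Third, durations scale linearly between adjacent tested sizes.

```python
from bisect import bisect_left

# PostgreSQL durations from the summary table, converted to hours.
# Assumptions: "+" (lower-bound) values are used as point values,
# and "1 month and a half" is taken as 45 days (30-day months).
POSTGRES_HOURS = {
    "local-to-local": {10: 7 / 60, 100: 2.0, 1000: 11.5},
    "local-to-s3": {10: 4.0, 100: 40.0, 1000: 14 * 24.0},      # 2 weeks
    "s3-to-local": {10: 6 + 40 / 60, 100: 2 * 24 + 12.0, 1000: 19 * 24.0},
    "s3-to-s3": {10: 10.5, 100: 5 * 24.0, 1000: 45 * 24.0},
}


def estimate_hours(scenario: str, size_gb: float) -> float:
    """Piecewise-linear estimate between the reported 10/100/1000 GB points.

    Sizes outside the tested 10..1000 GB range are rejected rather than
    extrapolated, because the report gives no data there.
    """
    points = POSTGRES_HOURS[scenario]
    sizes = sorted(points)
    if not sizes[0] <= size_gb <= sizes[-1]:
        raise ValueError(f"size_gb must be between {sizes[0]} and {sizes[-1]}")
    i = bisect_left(sizes, size_gb)
    if sizes[i] == size_gb:
        return points[sizes[i]]  # exact tested size: return the reported value
    lo, hi = sizes[i - 1], sizes[i]
    frac = (size_gb - lo) / (hi - lo)
    return points[lo] + frac * (points[hi] - points[lo])
```

For example, `estimate_hours("s3-to-s3", 550)` falls halfway between the 100 GB and 1000 GB points (120 and 1,080 hours) and returns 600 hours. The reported times do not grow linearly with size, so treat any interpolated value as a rough lower-bound estimate only.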

Charts and Discussion for Scenarios When Moving 10GB

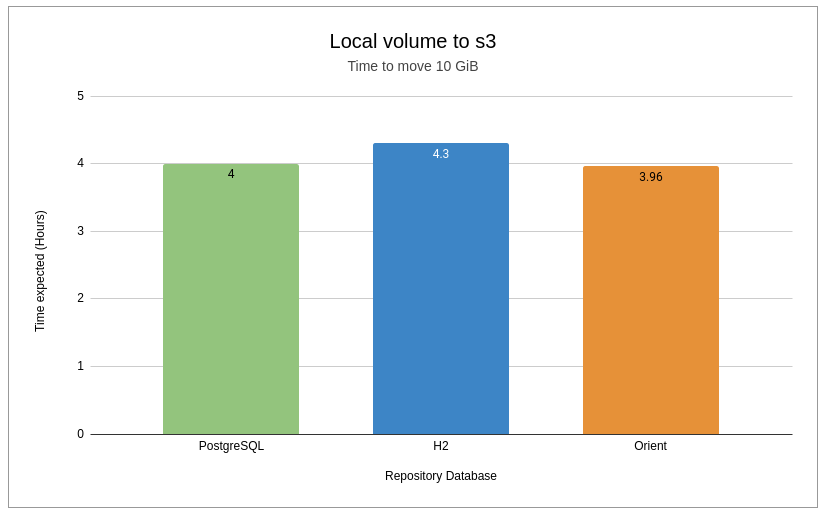

Local Volume to AWS S3

While OrientDB performed best in this scenario, note that OrientDB has been shown to fail with repositories larger than 10GB. OrientDB users should therefore not run this task on repositories larger than 10GB.

PostgreSQL performed similarly, and H2 was the slowest.


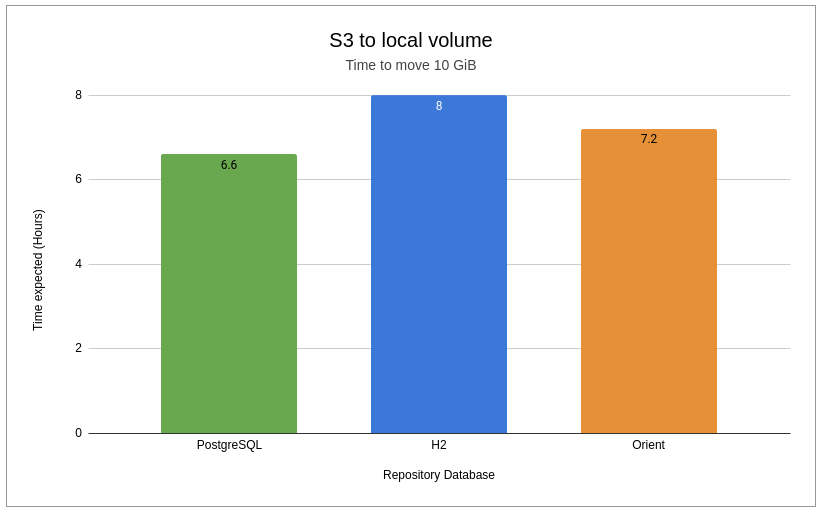

S3 to Local Volume

PostgreSQL performed best in this scenario; H2 and OrientDB were significantly slower.

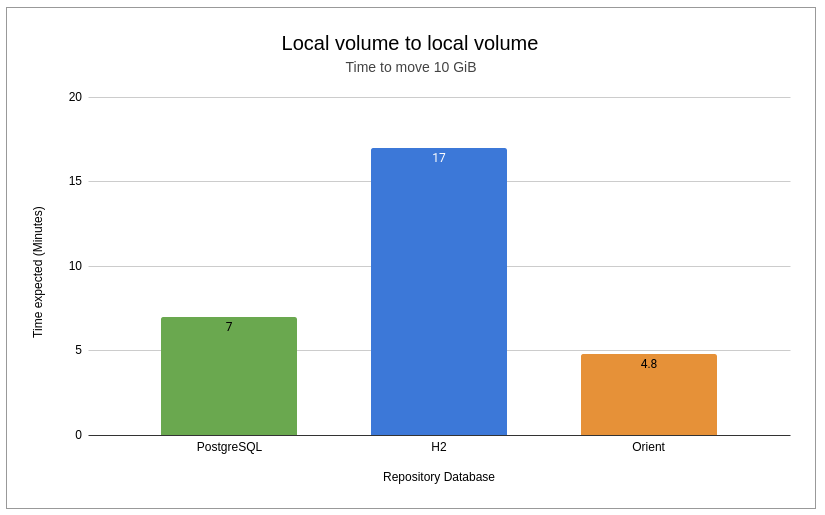

Local Volume to Local Volume

As anticipated, this scenario was the fastest for all databases. Note that this chart's scale is in minutes, while the other charts use hours.

While OrientDB performed best, we reiterate that OrientDB has been shown to fail with repositories larger than 10GB.

PostgreSQL performance was close to that of OrientDB. H2 took more than twice as long to complete the task.

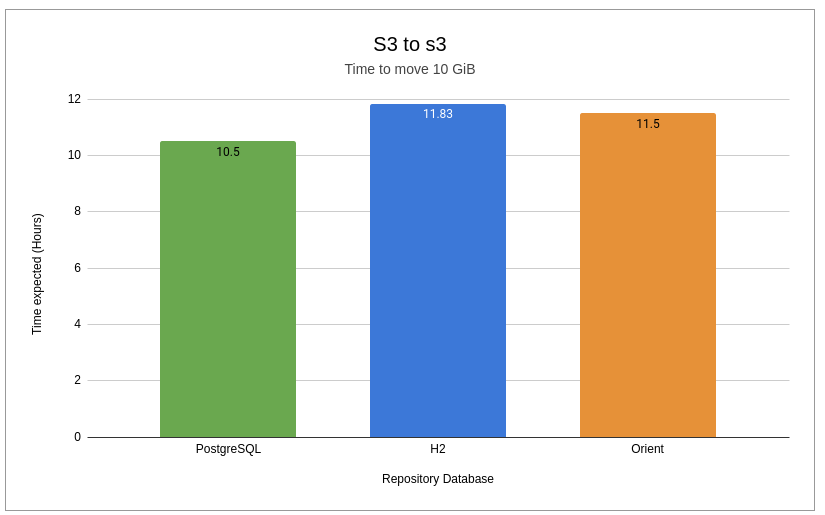

S3 to S3

AWS S3 to S3 was the slowest scenario observed. However, it is expected to be one of the most stable because of the S3 bucket's high availability. Customers should still account for the significant time the task will take to complete.

Performance Testing for Group Repositories

When you run this task on a group repository, the task moves only the metadata assets directly associated with the group repository's content ID. It does not move member repository content.

All test runs used S3 blob stores as both the source and destination.

The tested Sonatype Nexus Repository instance was using a PostgreSQL database.

Results Summary

| Number of Blobs Moved | Time Elapsed |
|---|---|
| 7,958 | 58 minutes, 14 seconds |
| 158 | 1 minute, 36 seconds |
| 8,720 | 55 minutes, 10 seconds |
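Dividing the number of blobs moved by the elapsed time gives a rough throughput for each group repository run. The sketch below does that arithmetic on the three rows of the results table. The names `RUNS` and `blobs_per_second` are illustrative only.

```python
# Group repository runs from the results table: (blobs moved, elapsed seconds).
RUNS = [
    (7_958, 58 * 60 + 14),  # 58 minutes, 14 seconds
    (158, 1 * 60 + 36),     # 1 minute, 36 seconds
    (8_720, 55 * 60 + 10),  # 55 minutes, 10 seconds
]


def blobs_per_second(blobs: int, seconds: int) -> float:
    """Average throughput of one run."""
    return blobs / seconds


for blobs, seconds in RUNS:
    rate = blobs_per_second(blobs, seconds)
    print(f"{blobs:>6} blobs in {seconds:>5} s -> {rate:.2f} blobs/s")
```

The three runs work out to roughly 1.6 to 2.6 blobs per second. With only three samples, treat any extrapolation from these rates to larger group repositories as an assumption rather than a measured result.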