Single-Node Cloud Resilient Deployment Example Using Google Cloud

A Helm Chart (GitHub, ArtifactHub) is available for our resiliency and high-availability deployment options. Be sure to read the deployment instructions in the associated README file before using the chart.

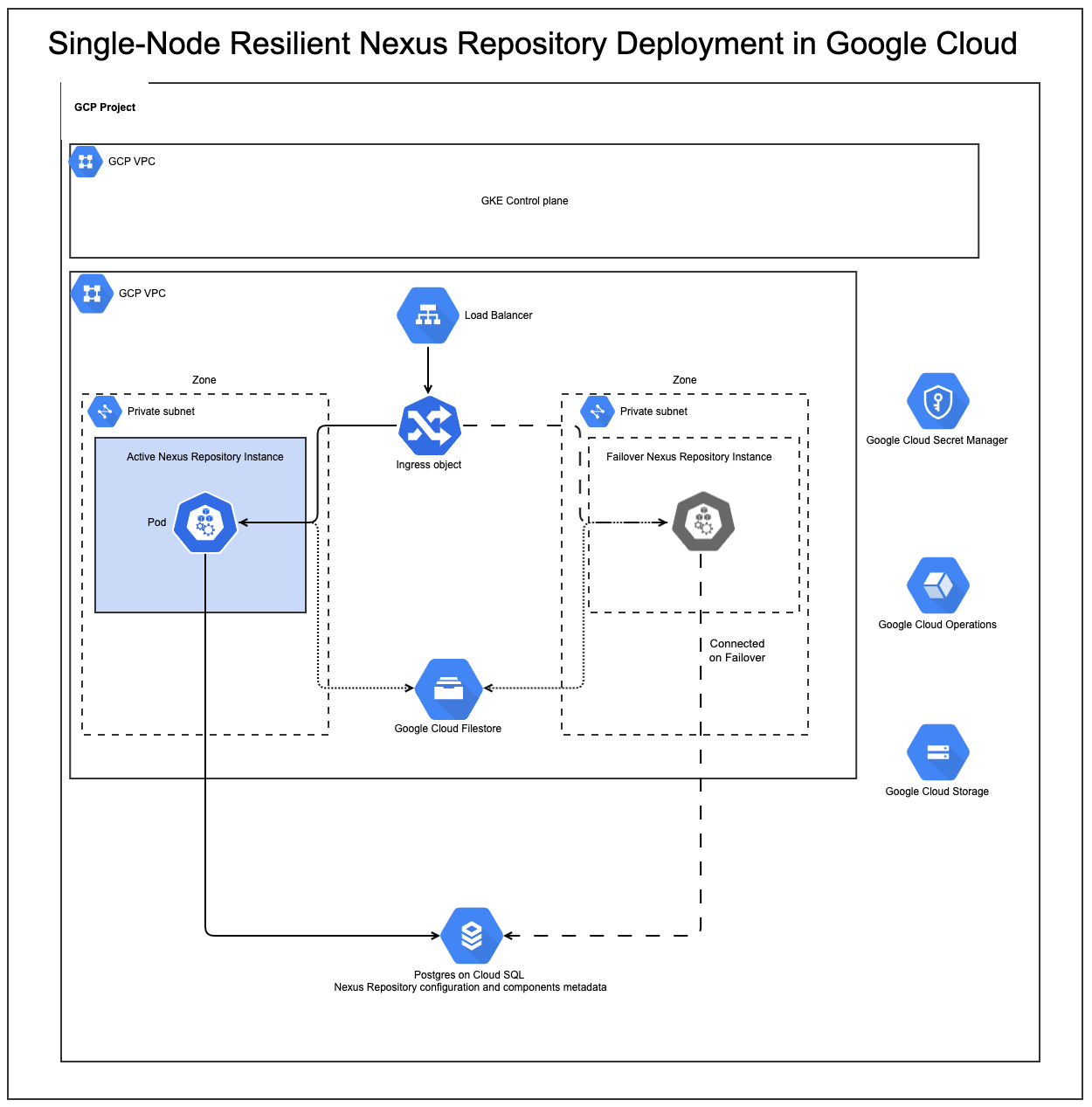

Ensure continuous delivery even in the face of disruptions. The steps below detail how to create a resilient Nexus Repository deployment on Google Cloud, leveraging Google Kubernetes Engine (GKE) and persistent storage to keep your artifacts available through outages.

If you already have a Nexus Repository instance and want to migrate to a resilient architecture, see our migration documentation.

Use Cases

This reference architecture is designed to protect against the following scenarios:

A Google Cloud zone outage within a single region

A node/server failure

A Nexus Repository service failure

You would use this architecture if you fit the following profiles:

You are a Nexus Repository Pro user looking for a resilient Nexus Repository deployment option in Google Cloud to reduce downtime

You would like to achieve automatic failover and fault tolerance as part of your deployment goals

You already have a GKE cluster set up as part of your deployment pipeline for your other in-house applications and would like to leverage the same cluster for your Nexus Repository deployment

You have migrated or set up Nexus Repository with an external PostgreSQL database and want to fully reap the benefits of an externalized database setup

You do not need High Availability (HA) active-active mode

Requirements

A Nexus Repository Pro license

Nexus Repository 3.74.0 or later

kubectl command-line tool

A Google Cloud account with permissions for accessing all of the Google services detailed in the instructions below

Limitations

In this reference architecture, a maximum of one Nexus Repository instance is running at a time. Having more than one Nexus Repository failover instance will not work.

Setting Up the Infrastructure

Note

If you plan to use the HA/Resiliency Helm Chart (GitHub, ArtifactHub), you can follow the detailed instructions available in this README file rather than the instructions on this page.

Step 1 - Google Kubernetes Engine Cluster

The first thing you must do is create a project. A project is a container that holds related resources for a Google Cloud solution. In this case, everything we are about to set up will be contained within this new project.

Follow Google's documentation to create a project from the Google Cloud console.

Then, follow Google's documentation to create a GKE cluster.

If you plan to use Google Cloud Secret Manager, you can also enable the Secret Manager add-on following Google Cloud's documentation. Ensure that the GKE node(s) on which Sonatype Nexus Repository will run have appropriate permissions for accessing Secret Manager.

If you plan to use Cloud Logging, enable workload logging when setting up your GKE cluster.
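If you prefer the gcloud CLI to the console, the cluster settings this page relies on can be collected into one command. The following Python sketch only builds and prints argument lists; the project ID, cluster name, and region are hypothetical placeholders. It assumes a regional cluster (so nodes span several zones), `--logging=SYSTEM,WORKLOAD` for workload logging, `--addons=GcpFilestoreCsiDriver` for the Filestore CSI driver used later for persistent volumes, and Workload Identity for Secret Manager access. The `--enable-secret-manager` flag for the Secret Manager add-on is included as an assumption; confirm it against the gcloud reference for your release.

```python
import shlex


def create_project_cmd(project: str) -> list[str]:
    """Build the command that creates the project holding every resource below."""
    return ["gcloud", "projects", "create", project]


def create_cluster_cmd(project: str, cluster: str, region: str,
                       secret_manager: bool = False) -> list[str]:
    """Build a `gcloud container clusters create` command for a regional cluster.

    A regional cluster places nodes in several zones of the region, so the
    Nexus Repository pod can be rescheduled into another zone after an outage.
    """
    cmd = [
        "gcloud", "container", "clusters", "create", cluster,
        f"--project={project}",
        f"--region={region}",
        # Send container stdout/stderr (workload logs) to Cloud Logging too.
        "--logging=SYSTEM,WORKLOAD",
        # Filestore CSI driver for cross-zone persistent volumes.
        "--addons=GcpFilestoreCsiDriver",
        # Workload Identity lets pods authenticate to Google Cloud APIs.
        f"--workload-pool={project}.svc.id.goog",
    ]
    if secret_manager:
        # Assumption: Secret Manager add-on flag; verify for your gcloud version.
        cmd.append("--enable-secret-manager")
    return cmd


if __name__ == "__main__":
    # Hypothetical identifiers; replace with your own before running anything.
    print(shlex.join(create_project_cmd("my-nexus-project")))
    print(shlex.join(create_cluster_cmd("my-nexus-project", "nexus-cluster",
                                        "us-central1")))
```

Before the cluster command can succeed, the new project needs a linked billing account and the Kubernetes Engine API enabled (`gcloud services enable container.googleapis.com`).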

Step 2 - Cloud SQL for PostgreSQL

In this step, you will configure your database for storing Sonatype Nexus Repository configurations and component metadata.

Follow Google's documentation for creating a Cloud SQL for PostgreSQL instance and/or our instructions in the HA GitHub.

When setting up the database, select the project you created when setting up your GKE cluster.

Select the same region as your GKE cluster.
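A command-line equivalent, sketched in Python under the same assumptions (placeholder names, commands printed rather than executed). `--availability-type=REGIONAL` adds a standby in a second zone with automatic failover, which fits the zone-outage scenario this architecture targets. The PostgreSQL version and machine tier shown are assumptions; check which PostgreSQL versions your Nexus Repository release supports and size the instance for your workload.

```python
import shlex


def create_instance_cmd(project: str, instance: str, region: str,
                        version: str = "POSTGRES_15",
                        tier: str = "db-custom-2-7680") -> list[str]:
    """Build a `gcloud sql instances create` command for a regional (HA) instance."""
    return [
        "gcloud", "sql", "instances", "create", instance,
        f"--project={project}",
        f"--database-version={version}",
        # Same region as the GKE cluster.
        f"--region={region}",
        # Standby in a second zone with automatic failover.
        "--availability-type=REGIONAL",
        # Assumed size: 2 vCPUs, 7.5 GB RAM; adjust for your workload.
        f"--tier={tier}",
    ]


def create_database_cmd(project: str, instance: str, database: str) -> list[str]:
    """Build a command that creates the database Nexus Repository will use."""
    return ["gcloud", "sql", "databases", "create", database,
            f"--instance={instance}", f"--project={project}"]


if __name__ == "__main__":
    # Hypothetical names; nothing is executed, the commands are only printed.
    print(shlex.join(create_instance_cmd("my-nexus-project", "nexus-db", "us-central1")))
    print(shlex.join(create_database_cmd("my-nexus-project", "nexus-db", "nexus")))
```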

Step 3 - Ingress Controller

The next step is to configure an Ingress Controller to provide reverse proxy, configurable traffic routing, and TLS termination for Kubernetes services.

Follow Google's documentation for setting up an ingress controller in GKE.

Optionally, reserve a static IP address for the Ingress that fronts the Sonatype Nexus Repository pod. This way, if the Nexus Repository pod restarts, it can still be accessed through the same IP address.
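A minimal sketch of the static IP option, assuming the built-in GKE Ingress controller rather than a third-party one: reserve a global address, then reference it by name in the `kubernetes.io/ingress.global-static-ip-name` annotation. The project, namespace, Service, and address names are hypothetical; port 8081 is the Nexus Repository HTTP port. The manifest is emitted as JSON, which `kubectl apply -f` accepts just like YAML.

```python
import json
import shlex


def reserve_ip_cmd(project: str, name: str) -> list[str]:
    """Reserve a global static IP; GKE's external Ingress uses global addresses."""
    return ["gcloud", "compute", "addresses", "create", name,
            "--global", f"--project={project}"]


def nexus_ingress(namespace: str, service: str, ip_name: str,
                  port: int = 8081) -> dict:
    """GKE Ingress that keeps the load balancer on a reserved IP across pod restarts."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": "nexus-ingress",
            "namespace": namespace,
            "annotations": {
                # Name (not address) of the IP reserved with reserve_ip_cmd().
                "kubernetes.io/ingress.global-static-ip-name": ip_name,
            },
        },
        "spec": {
            # The Service must be reachable by the GKE load balancer
            # (NodePort, or container-native load balancing via NEGs).
            "defaultBackend": {
                "service": {"name": service, "port": {"number": port}},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical project, namespace, Service, and address names.
    print(shlex.join(reserve_ip_cmd("my-nexus-project", "nexus-ip")))
    print(json.dumps(nexus_ingress("nexus", "nexus-repo-service", "nexus-ip"), indent=2))
```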

Step 4 - License Management

There are two options for license management:

Google Cloud Secret Manager and External Secrets Operator - You can use this option whether you're using the HA/Resiliency Helm chart or manually deploying YAML files.

Configure License in Helm Chart - This option is for those using the HA/Resiliency Helm Chart but not using Google Cloud Secret Manager.

Option 1 - Google Cloud Secret Manager and External Secrets Operator (Recommended)

Google Cloud Secret Manager is one of the options for storing secrets like your Sonatype Nexus Repository license and database credentials. In the event of a failover, Secret Manager can retrieve the Nexus Repository license when the new Nexus Repository container starts. This way, your Nexus Repository always starts in Pro mode.

Note

For Helm chart users, depending on the decoding strategy you configured in the license section of your values.yaml, you will need to either pass your license file as-is (if the strategy is null) or base64-encode it first (if the strategy is set to Base64).

The external secrets portion of values.yaml looks like the following:

```yaml
externalsecrets:
  enabled: false
  secretstore:
    name: nexus-secret-store
    spec:
      provider:
        # aws:
        #   service: SecretsManager
        #   region: us-east-1
        #   auth:
        #     jwt:
        #       serviceAccountRef:
        #         name: nexus-repository-deployment-sa # Use the same service account name as specified in serviceAccount.name
    # Example for Azure
    # spec:
    #   provider:
    #     azurekv:
    #       authType: WorkloadIdentity
    #       vaultUrl: "https://xx-xxxx-xx.vault.azure.net"
    #       serviceAccountRef:
    #         name: nexus-repository-deployment-sa # Use the same service account name as specified in serviceAccount.name
  secrets:
    nexusSecret:
      enabled: false
      refreshInterval: 1h
      providerSecretName: nexus-secret.json
      decodingStrategy: null # For Azure set to Base64
    database:
      refreshInterval: 1h
      valueIsJson: false
      providerSecretName: dbSecretName # The name of the AWS SecretsManager/Azure KeyVault/etc. secret
      dbUserKey: username # The name of the key in the secret that contains your database username
      dbPasswordKey: password # The name of the key in the secret that contains your database password
      dbHostKey: host # The name of the key in the secret that contains your database host
    admin:
      refreshInterval: 1h
      valueIsJson: false
      providerSecretName: adminSecretName # The name of the AWS SecretsManager/Azure KeyVault/etc. secret
      adminPasswordKey: "nexusAdminPassword" # The name of the key in the secret that contains your nexus repository admin password
    license:
      providerSecretName: nexus-repo-license.lic # The name of the AWS SecretsManager/Azure KeyVault/etc. secret that contains your Nexus Repository license
      decodingStrategy: null # Can be Base64
      refreshInterval: 1h
```

Select the project you created when setting up your GKE cluster.

Select the same region as your GKE cluster.

Add your license file to Secret Manager using the Google Cloud CLI. The command to add your license will look similar to the following:

```shell
gcloud secrets create <name_for_secret> \
  --replication-policy="automatic" \
  --data-file=<path_of_license_file.lic>
```

Add the secrets for your database username, password, and host as well as your Nexus Repository admin password.

You can add these secrets manually in the Google Cloud console.

Follow Google's documentation for adding secrets via the console.

Locate the external secrets portion of your values.yaml (shown in the code block above) and complete the steps detailed in the Helm chart README.
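The values.yaml comments show AWS and Azure providers only. For Google Cloud Secret Manager, External Secrets Operator uses a `gcpsm` provider. The following Python sketch builds (1) a `gcloud secrets create` command that reads the secret value from stdin via `--data-file=-`, keeping it out of shell history and off disk, and (2) a values override for the `gcpsm` provider with Workload Identity authentication. It assumes the chart passes `secretstore.spec` through to the SecretStore resource unchanged, as the AWS and Azure examples suggest; the secret names, project, cluster, and region are hypothetical, and the Helm chart README remains the authority on how the chart maps secrets to keys.

```python
import json
import shlex
import subprocess


def create_secret_cmd(project: str, name: str) -> list[str]:
    """`gcloud secrets create` reading the payload from stdin (--data-file=-)."""
    return ["gcloud", "secrets", "create", name, f"--project={project}",
            "--replication-policy=automatic", "--data-file=-"]


def create_secret(project: str, name: str, value: str) -> None:
    """Create the secret, piping the value on stdin so it never touches disk."""
    subprocess.run(create_secret_cmd(project, name),
                   input=value.encode("utf-8"), check=True)


def gcpsm_secretstore_values(project: str, cluster: str, location: str,
                             service_account: str) -> dict:
    """values.yaml override pointing External Secrets Operator at Secret Manager."""
    return {
        "externalsecrets": {
            "enabled": True,
            "secretstore": {
                "name": "nexus-secret-store",
                "spec": {
                    "provider": {
                        "gcpsm": {
                            "projectID": project,
                            "auth": {
                                "workloadIdentity": {
                                    "clusterLocation": location,
                                    "clusterName": cluster,
                                    # Same Kubernetes service account as serviceAccount.name
                                    "serviceAccountRef": {"name": service_account},
                                },
                            },
                        },
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical names; nothing is created unless you call create_secret().
    print(shlex.join(create_secret_cmd("my-nexus-project", "nexus-admin-password")))
    # JSON is valid YAML, so this can be saved and passed to helm with -f.
    print(json.dumps(gcpsm_secretstore_values(
        "my-nexus-project", "nexus-cluster", "us-central1",
        "nexus-repository-deployment-sa"), indent=2))
```

The service account also needs permission to read the secrets, for example the Secret Manager Secret Accessor role (`roles/secretmanager.secretAccessor`) granted through Workload Identity.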

Option 2 - Configure License in Helm Chart

This option is only for those using the HA/Resiliency Helm chart but not Google Cloud Secret Manager.

In the values.yaml, locate the license section as shown below:

```yaml
license:
  name: nexus-repo-license.lic
  licenseSecret:
    enabled: false
    file: # Specify the license file name with --set-file license.licenseSecret.file="file_name" helm option
    fileContentsBase64: your_license_file_contents_in_base_64
    mountPath: /var/nexus-repo-license
```

Change `license.licenseSecret.enabled` to `true`.

Do one of the following:

Specify your license file in `license.licenseSecret.file` with the `--set-file license.licenseSecret.file="file_name"` Helm option.

Put the base64 representation of your license file as the value for `license.licenseSecret.fileContentsBase64`.
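For the base64 route, the encoded value should be a single line. A small, dependency-free Python sketch that produces it from the license path you pass on the command line (on GNU coreutils, `base64 -w 0 <file>` does the same). It also lists the Helm flags for the `--set-file` route, which is equivalent to setting `license.licenseSecret.enabled` to `true` in values.yaml. The same encoding applies if you store the license in Secret Manager with the decoding strategy set to Base64.

```python
import base64
import sys
from pathlib import Path


def encode_license(data: bytes) -> str:
    """Base64-encode license bytes as one line with no trailing newline."""
    return base64.b64encode(data).decode("ascii")


def license_file_to_base64(path: str) -> str:
    """Read the .lic file as bytes (it need not be text) and encode it."""
    return encode_license(Path(path).read_bytes())


def helm_set_file_args(license_path: str) -> list[str]:
    """Helm flags for the --set-file variant of license.licenseSecret."""
    return ["--set", "license.licenseSecret.enabled=true",
            "--set-file", f"license.licenseSecret.file={license_path}"]


if __name__ == "__main__":
    if len(sys.argv) > 1:
        # Paste this output into license.licenseSecret.fileContentsBase64.
        print(license_file_to_base64(sys.argv[1]))
```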

Step 5 - Cloud Logging via Google Cloud's Operations Suite (Optional, but Recommended)

Tip

If you plan to use the HA/Resiliency Helm chart (GitHub, ArtifactHub) and Cloud Logging via Google Cloud's Operations Suite, you will need to enable workload logging when creating your cluster; however, you do not need to do anything else in this step as the Helm chart will handle it for you.

Why Use Cloud Logging?

As a best practice, we recommend externalizing your Nexus Repository logs to Cloud Logging in Google Cloud's Operations Suite. On Kubernetes, Nexus Repository may run on different nodes in different zones over the course of a single day; sending its logs to Cloud Logging keeps the logs from nodes in all zones accessible in one place.

How to Enable Cloud Logging

Cloud Logging for system logs is enabled by default for all new GKE clusters. However, to capture logs from Nexus Repository (i.e., workload logs), you will need to enable workload logging separately when creating your GKE cluster.

Follow Google's documentation for enabling workload logging when creating your GKE cluster.

Enabling workload logging when creating your GKE cluster automatically sends the stdout and stderr output of all containers in the Sonatype Nexus Repository pod to Cloud Logging.

In addition to the main log file (i.e., nexus.log), Nexus Repository uses sidecar containers to write the contents of the other log files (request, audit, and task logs) to stdout so that they are also pushed to Cloud Logging automatically.
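Once logs reach Cloud Logging, you can query them by Kubernetes resource labels. A minimal Python sketch that builds a Logging query for the Nexus Repository namespace and the matching `gcloud logging read` command. The `k8s_container` resource type and its `cluster_name`, `namespace_name`, and `container_name` labels are standard for GKE container logs; the cluster, namespace, and container names (including the sidecar name) are hypothetical, so check `kubectl get pod <nexus-pod-name> -o jsonpath='{.spec.containers[*].name}'` for the real ones.

```python
import shlex
from typing import Optional


def nexus_log_filter(cluster: str, namespace: str,
                     container: Optional[str] = None) -> str:
    """Cloud Logging query for container logs in the Nexus Repository namespace."""
    clauses = [
        'resource.type="k8s_container"',
        f'resource.labels.cluster_name="{cluster}"',
        f'resource.labels.namespace_name="{namespace}"',
    ]
    if container:
        # Narrow to one container, e.g. a log sidecar (names vary by YAML/chart).
        clauses.append(f'resource.labels.container_name="{container}"')
    return " AND ".join(clauses)


def read_logs_cmd(project: str, log_filter: str, limit: int = 50,
                  freshness: str = "1d") -> list[str]:
    """`gcloud logging read` for the newest matching entries."""
    return ["gcloud", "logging", "read", log_filter, f"--project={project}",
            f"--limit={limit}", f"--freshness={freshness}"]


if __name__ == "__main__":
    # Hypothetical cluster, namespace, and container names.
    query = nexus_log_filter("nexus-cluster", "nexus", "request-log")
    print(shlex.join(read_logs_cmd("my-nexus-project", query)))
```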

Step 6 - Persistent Volumes with Google Filestore

Sonatype Nexus Repository requires persistent volumes to store logs and configurations to help populate support zip files. Google Filestore provides a shared location for log storage.

Note that the Helm chart supports both Google Filestore and Persistent Disk; however, we recommend that you use Google Filestore.

During a Nexus Repository instance failover, the Kubernetes scheduler may restart the pod in a different zone from the one in which it previously ran. If the pod's PVC is bound to a zonal volume (e.g., Persistent Disk) in the original zone, the pod will not start, because a zonal Persistent Disk can only be attached to nodes in its own zone. Hence, Google Filestore is required for persisting the log files in a location that is accessible by all Nexus Repository instances across zones.

When you apply the StatefulSet YAML (or the values.yaml if using the HA/Resiliency Helm Chart), the volumeClaimTemplates section defined there tells GKE to dynamically provision a Google Filestore instance of the specified size.

This step requires the GKE Filestore CSI driver. Follow Google's documentation to enable the GKE Filestore CSI driver.
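A sketch of the storage side, assuming you manage the StorageClass yourself rather than using one of GKE's built-in Filestore classes (such as `standard-rwx`): the command enables the driver on an existing cluster, the StorageClass uses the `filestore.csi.storage.gke.io` provisioner, and the claim template is the entry your StatefulSet's `volumeClaimTemplates` list would contain. Names, tier, network, and size are assumptions; the `standard` tier (Basic HDD) has a 1 TiB minimum capacity, which is why the default request is `1Ti`.

```python
import json
import shlex


def enable_filestore_csi_cmd(cluster: str, region: str) -> list[str]:
    """Enable the Filestore CSI driver on an existing GKE cluster."""
    return ["gcloud", "container", "clusters", "update", cluster,
            f"--region={region}",
            "--update-addons=GcpFilestoreCsiDriver=ENABLED"]


def filestore_storage_class(name: str, tier: str = "standard",
                            network: str = "default") -> dict:
    """StorageClass that dynamically provisions a Filestore instance per PVC."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "filestore.csi.storage.gke.io",
        # Provision only once the pod is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
        # tier "standard" = Basic HDD; network = the VPC your cluster uses.
        "parameters": {"tier": tier, "network": network},
    }


def filestore_claim_template(storage_class: str, size: str = "1Ti") -> dict:
    """One entry for the StatefulSet's spec.volumeClaimTemplates list."""
    return {
        "metadata": {"name": "nexus-data"},
        "spec": {
            # Filestore is NFS, so nodes in any zone of the region can mount it.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical cluster, region, and StorageClass names.
    print(shlex.join(enable_filestore_csi_cmd("nexus-cluster", "us-central1")))
    print(json.dumps(filestore_storage_class("nexus-filestore"), indent=2))
    print(json.dumps(filestore_claim_template("nexus-filestore"), indent=2))
```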

For those using the Helm chart, you will need to make updates to your values.yaml before installing the Helm chart. Details about what to update are included in the Helm chart's README file.

You can confirm that you've set up your cluster correctly by taking the following steps:

Check the persistent volume claim (PVC) using a command like the following:

```shell
kubectl get pvc -n <nexus-namespace>
```

Ensure the PVC associated with your Nexus Repository deployment is `Bound` and has the correct amount of storage requested.

Look for the `VOLUME` column to see the name of the persistent volume it is using.

Check the persistent volume using a command like the following:

```shell
kubectl describe pv <pv-name>
```

Verify that the persistent volume is backed by Google Filestore. Look for information about the Google Filestore instance (e.g., `server`, `volumeHandle`) in the output.

Check the Nexus Repository pod using a command like the following:

```shell
kubectl describe pod <nexus-pod-name> -n <nexus-namespace>
```

In the Volumes section, confirm that the volume references your PVC (ClaimName).

In the Nexus Repository container's Mounts section, check the mount path to see where the volume is mounted inside the container.
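The same checks can be scripted against kubectl's JSON output instead of reading `describe` text. A minimal Python sketch: `bound_pvcs` reproduces the STATUS and VOLUME columns of `kubectl get pvc`, `requested_storage` reads a claim's requested size, and `is_filestore_pv` checks that a persistent volume's CSI driver is `filestore.csi.storage.gke.io`. The helper names are hypothetical, and `kubectl_json` assumes kubectl is on your PATH and pointed at the cluster.

```python
import json
import subprocess

FILESTORE_DRIVER = "filestore.csi.storage.gke.io"


def kubectl_json(*args: str) -> dict:
    """Run `kubectl <args> -o json` and parse its output."""
    result = subprocess.run(["kubectl", *args, "-o", "json"],
                            check=True, capture_output=True, text=True)
    return json.loads(result.stdout)


def bound_pvcs(pvc_list: dict) -> dict[str, str]:
    """Map each Bound PVC name to its persistent volume (the VOLUME column)."""
    return {
        item["metadata"]["name"]: item["spec"]["volumeName"]
        for item in pvc_list.get("items", [])
        if item.get("status", {}).get("phase") == "Bound"
    }


def requested_storage(pvc: dict) -> str:
    """Storage requested by a single PVC, e.g. '1Ti'."""
    return pvc["spec"]["resources"]["requests"]["storage"]


def is_filestore_pv(pv: dict) -> bool:
    """True if the PV was provisioned by the GKE Filestore CSI driver."""
    return pv.get("spec", {}).get("csi", {}).get("driver") == FILESTORE_DRIVER
```

For example, `bound_pvcs(kubectl_json("get", "pvc", "-n", "nexus"))` returns a mapping from claim to volume, and passing `kubectl_json("get", "pv", <volume>)` for each volume to `is_filestore_pv` should return `True` when the setup is correct.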

Step 7 - Google Cloud Storage

Google Cloud Storage provides your object (blob) storage. When you create your blob stores (via the UI or REST API), buckets with your designated names are created automatically. However, if you wish to create buckets manually, follow Google's documentation for creating a storage bucket, using the following settings:

Select the project you created when setting up your GKE cluster.

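Select the same region as your GKE cluster.

Select the Standard storage class.

Select the Region location type; regional buckets store data redundantly across multiple zones in that region.

Restrict access to your VPC network (for example, with VPC Service Controls).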
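If you do create a bucket by hand, the location and storage-class settings map onto a single `gcloud storage buckets create` command, sketched here in Python. The project, bucket name, and region are hypothetical; `--uniform-bucket-level-access` is an optional hardening choice, not something this page requires. Network restrictions such as VPC Service Controls are configured separately and are not shown.

```python
import shlex


def create_bucket_cmd(project: str, bucket: str, region: str) -> list[str]:
    """Build a `gcloud storage buckets create` command for a regional bucket."""
    return [
        "gcloud", "storage", "buckets", "create", f"gs://{bucket}",
        f"--project={project}",
        # Regional location: data is stored redundantly across zones in the region.
        f"--location={region}",
        "--default-storage-class=STANDARD",
        # Optional hardening: IAM-only access control, no per-object ACLs.
        "--uniform-bucket-level-access",
    ]


if __name__ == "__main__":
    # Hypothetical names; bucket names must be globally unique.
    print(shlex.join(create_bucket_cmd("my-nexus-project", "my-nexus-blobs", "us-central1")))
```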

Apply YAML Files to Start Your Deployment

Tip

If you are using the HA/Resiliency Helm Chart (GitHub, ArtifactHub), skip these steps and follow the instructions on GitHub for installing the Helm Chart instead. Ensure you update your values.yaml as detailed in the Helm chart's README file.

Warning

The YAML files linked in this section are just examples and cannot be used as-is. You must fill them out with the appropriate information for your deployment to be able to use them.

Step 1 - Create a Kubernetes Namespace

All Kubernetes objects for the Sonatype Nexus Repository need to be in one namespace.

There are two ways to create a Kubernetes namespace: manually using the kubectl command-line tool or through a Namespaces YAML.

Option 1 - Manually Create Namespace

Follow the Kubernetes documentation for creating a Kubernetes namespace with the kubectl command-line tool.

You will use the following command to create the namespace:

```shell
kubectl create namespace <namespace>
```

Option 2 - Use the Namespaces YAML

Fill out a Namespaces YAML with the correct information for your deployment.

Apply your YAML to create a namespace.
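A Namespaces YAML needs very little. A minimal Python sketch that validates the name (Kubernetes namespace names must be RFC 1123 DNS labels: lowercase letters, digits, and hyphens, at most 63 characters, starting and ending with a letter or digit) and emits the manifest as JSON, which `kubectl apply -f` accepts. The namespace name `nexus` is a hypothetical example.

```python
import json
import re

# RFC 1123 label: lowercase alphanumerics and '-', alphanumeric at both ends.
_DNS_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def is_valid_namespace(name: str) -> bool:
    """Check a name against Kubernetes' namespace naming rules."""
    return len(name) <= 63 and _DNS_LABEL.fullmatch(name) is not None


def namespace_manifest(name: str) -> dict:
    """Return a minimal Namespace object for the given name."""
    if not is_valid_namespace(name):
        raise ValueError(f"invalid namespace name: {name!r}")
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


if __name__ == "__main__":
    # Save the output as namespace.json, then: kubectl apply -f namespace.json
    print(json.dumps(namespace_manifest("nexus"), indent=2))
```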

Step 2 - Fill Out YAML Files

Use the sample YAML files linked in the Sample YAML Files section below as a starting point.

Fill out your YAML files with the appropriate information for your deployment.

Step 3 - Apply the YAML Files

You will now apply your YAML files in the order below:

Service Account YAML

Logback Tasklogfile Override YAML

Services YAML

Storage Class YAML

StatefulSet YAML

Ingress YAML
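Because the order matters, it can help to script it. A minimal Python sketch that applies the files one at a time in the order listed above and stops at the first failure; the file names are hypothetical stand-ins for your filled-out copies, and the default `dry_run=True` only prints the commands. Each file is assumed to set its own `metadata.namespace`, so no `-n` flag is passed.

```python
import shlex
import subprocess
from typing import Sequence

# Hypothetical file names, in the required apply order.
APPLY_ORDER = (
    "service-account.yaml",
    "logback-tasklogfile-override.yaml",
    "services.yaml",
    "storage-class.yaml",
    "statefulset.yaml",
    "ingress.yaml",
)


def apply_commands(files: Sequence[str] = APPLY_ORDER) -> list[list[str]]:
    """One `kubectl apply -f` command per file, in the given order."""
    return [["kubectl", "apply", "-f", f] for f in files]


def apply_all(files: Sequence[str] = APPLY_ORDER, dry_run: bool = True) -> None:
    """Apply files sequentially; check=True aborts on the first failed apply."""
    for cmd in apply_commands(files):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply_all(dry_run=True)
```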

Explanations of what each file does can be found in the Sample YAML Files reference below.

Sample YAML Files

Warning

The YAML files linked in this section are just examples and cannot be used as-is. You must fill them out with the appropriate information for your deployment to be able to use them.

* If you are using the HA/Resiliency Helm Chart (GitHub, ArtifactHub), do not use this table. Follow the instructions on GitHub for installing the Helm chart instead.

| File | What it Does | Do I Need This File?* |
|---|---|---|
| Namespaces YAML | Creates a namespace into which to install the Kubernetes objects for Sonatype Nexus Repository | Needed only if you are using the YAML method for creating a namespace. |
| Service Account YAML | Creates a Kubernetes service account | Yes |
| Logback Tasklogfile Override YAML | Changes Nexus Repository's default behavior of sending task logs to separate files; the task files will now exist at startup rather than only as the tasks run | Yes |
| Services YAML | Creates a Kubernetes service through which the Nexus Repository application HTTP port (i.e., 8081) can be reached | Yes |
| Storage Class YAML | Creates a storage class for persistent volumes | Yes |
| StatefulSet YAML | Starts your Sonatype Nexus Repository pods; uses secrets for mounting volumes | Needed only if you are using Google Cloud Secret Manager. |
| Ingress YAML | Exposes the Nexus Repository service externally so that you can communicate with the pods | Yes |
| gcp-values-eso-enabled YAML or gcp-values-eso-disabled YAML | Customized values.yaml files provided as an example of how to update values.yaml | Not for use; provided as an example of how to update a values.yaml |

Note

Always apply your YAML files in the order specified above.

The resources created by these YAMLs are not in the default namespace.

The sample YAMLs are set up to disable the default blob stores and repositories on all instances.